Compute Canada Notes

slurm general

Clarifying srun, sbatch, and salloc

srun runs a single command and waits for it to finish.

sbatch submits a script and lets Slurm handle the standard output, so it suits long-running tasks.

salloc allocates resources (CPUs, GPUs, etc.) for interactive work.

Official Machine Learning Courses

Deploy your virtual environment and submit a job

    # check available packages
    module avail $Package_Name
    # load the required modules first, so the environment is built on them
    module load python
    module load scipy-stack
    # create the virtual environment
    virtualenv --no-download ~/VENV
    # activate the virtual environment
    source ~/VENV/bin/activate
    # update pip
    pip install --upgrade pip
    # check the wheels available on the cluster to avoid repeated downloads
    avail_wheels torch
    # use '--no-index' to install only from the wheels offered on the server
    # (this searches the Compute Canada wheel warehouse)
    pip install --no-index torch
    # check the installation
    pip list | grep torch

NB: loading a module does not mean the package is installed in your environment. In Jupyter, you still have to run pip3 install --no-index $package_name.

Job submission requires a bash script file.

An example script is shown below:

    #!/bin/bash
    #SBATCH --account=<your_account>
    #SBATCH --ntasks=2
    #SBATCH --nodes=1
    #SBATCH --gres=gpu:2
    #SBATCH --cpus-per-task=3
    #SBATCH --mem=10G
    #SBATCH --time=00:30:00
    #SBATCH -o outlog-%j.out
    #SBATCH --job-name=my-named-job
    # the next two lines are for email notification
    #SBATCH --mail-user=your.email@example.com
    #SBATCH --mail-type=ALL
    module load python
    module load scipy-stack
    source ~/VENV/bin/activate
    cd ~/MNIST
    srun python ~/MNIST/mnist.py

Then submit the script with:

    sbatch YOUR_SUBMIT_SCRIPT.sh

python package and environment

On Cedar, required Python packages may need to be installed with:

    python -m pip install $PACKAGE

Another thing: Graham can be the most responsive cluster, since it is hosted at the University of Waterloo.

Note where the clusters are located: Cedar is in BC. Graham also supports Jupyter now.

Real-time python output to file

For code running on a server, I recommend writing output to a file in real time:

    with open('somefile.txt', 'a') as your_file:
        your_file.write('Hello World\n')
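The same append-as-you-go idea works on the shell side of a job script. Below is a minimal sketch; the helper name log_line and the temporary log file are made up for the illustration and are not part of any cluster tooling.

```shell
# Append timestamped progress lines to a log file as the job runs.
log_file="$(mktemp)"   # placeholder; a real job would use a fixed path

log_line() {
  # >> appends, so lines written earlier are never overwritten
  printf '%s %s\n' "$(date '+%F %T')" "$*" >> "$log_file"
}

log_line "epoch 1 done"
log_line "epoch 2 done"

# Show the latest entry; tail -f on the same file follows it live.
tail -n 1 "$log_file"
```

Each call opens, appends and closes the file, so a second terminal can watch the progress while the job is still running.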

file storage

{Official Doc Link}

Scratch has 20TB, but files older than 60 days are purged. Project has 1TB and is not purged. The best practice is to do intensive reading and writing on scratch and keep backups on project. (For search index purposes, leave expired, expiry, expiring here.)

An email is sent to the user before the purge.

To locate the files named in a purge warning:

    # -atime tests the last access time; +59 matches files last accessed at least
    # 60 days ago (find rounds ages down to whole days), a bare 30 means exactly 30 days
    # > purge_warn_ls.txt redirects the listing to a text file for the next filtering step
    find /scratch/YOUR_USER_NAME -atime +59 -ls > purge_warn_ls.txt
    # the first awk prints the last field (the path) via $NF and passes it on with |
    # the second awk splits the path on '/' and prints fields 2, 3, 4, 5
    # uniq collapses repeated adjacent lines from the previous stage
    # so we can see which folders the purge warning covers
    awk '{print $NF}' purge_warn_ls.txt | awk -F'/' '{print $2,$3,$4,$5}' | uniq
    # batch update the files:
    # just touch every file in the purge list
    awk '{print $NF}' purge_warn_ls.txt | xargs -n1 touch
    # to actively remove files instead:
    # -v is inverse match, so everything NOT matching the pattern is removed
    ls | grep -v "THE_PATTERN_OF_REMAIN_FILES" | xargs rm
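To see what -atime +59 actually selects without touching real scratch files, here is a small self-contained sketch. The temporary directory and file names are made up, and it assumes GNU touch and xargs (for -d '70 days ago' and -r), which is what Linux clusters normally provide.

```shell
# Demo in a throwaway directory; no real scratch files are involved.
demo_dir="$(mktemp -d)"
touch "$demo_dir/old.dat" "$demo_dir/new.dat"

# Back-date only the access time of old.dat (GNU touch syntax).
touch -a -d '70 days ago' "$demo_dir/old.dat"

# Files whose last access is at least 60 days old.
stale_before="$(find "$demo_dir" -type f -atime +59)"
echo "stale before: $stale_before"

# Refresh the timestamps; NUL-delimited so unusual file names survive.
find "$demo_dir" -type f -atime +59 -print0 | xargs -0 -r touch

stale_after="$(find "$demo_dir" -type f -atime +59)"
echo "stale after: ${stale_after:-<none>}"
```

Piping find straight into xargs with -print0 and -0 avoids the intermediate text file and copes with spaces in file names. As far as I know, the purging policy discourages refreshing timestamps only to dodge the purge, so copying what matters to project is the safer route.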

file transfer

- To dropbox: https://riptutorial.com/dropbox-api

- Nextcloud by Compute Canada: https://docs.alliancecan.ca/wiki/Nextcloud

At the bottom of that page there are two command lines for this:

    # file upload
    curl -k -u <username> -T <filename> https://nextcloud.computecanada.ca/remote.php/webdav/
    # file download
    curl -k -u <username> https://nextcloud.computecanada.ca/remote.php/webdav/<filename> -o <filename>

- Cloud Local: https://docs.alliancecan.ca/wiki/Transferring_data#From_the_World_Wide_Web

basically using sftp

matlab

MATLAB on the Compute Canada cluster requires:

    # load the module
    module load matlab
    # test license availability; OK if it returns a serial number
    matlab -nodisplay -nojvm -batch "disp(license())"
    # run in command-line mode
    matlab -nodisplay -nojvm

Because MATLAB is RAM-greedy, salloc is usually used to request resources before running it.

check submitted slurm requests:

    sacct

It seems jobs can use a complete node (node mode) or part of a node (task mode).

{Ref-Official Doc}

Job arrays can also be used for many similar serial jobs, and MPI for parallel jobs.
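A job array could look roughly like the sketch below. --array and SLURM_ARRAY_TASK_ID are standard Slurm; the input_N.txt naming scheme and the resource numbers are placeholders I made up, and a real script would also need an --account line like the example script earlier.

```shell
#!/bin/bash
#SBATCH --array=1-4          # four independent array tasks
#SBATCH --time=00:10:00
#SBATCH --mem=1G

# Slurm sets SLURM_ARRAY_TASK_ID for each array task.
# Default to 1 so the script can also be dry-run by hand outside Slurm.
task_id="${SLURM_ARRAY_TASK_ID:-1}"
input_file="input_${task_id}.txt"   # hypothetical naming scheme

echo "task ${task_id} would process ${input_file}"
```

Submitting it once with sbatch queues all four tasks, and each one picks its own input from the task ID.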

Here is a PDF introducing the job submission and scheduling regulations:

{Link}

An example salloc request is below:

    salloc --time=DD-HH:MM --mem-per-cpu=<number>G --ntasks=<number> --account=<your_account>

tips on Graham

Official doc

It includes many handy custom functions.

https://wiki.math.uwaterloo.ca/fluidswiki/index.php?title=Graham_Tips#Virtual_Desktop

request GPU

The official doc {Link} gives examples of:

- GPU on one node

- task-oriented multi-GPU

- MPI multi-threading

An example GPU request is shown below:

    salloc --account=<account name> --mem=8G --time=3:00:00 -J <job name> --nodes=1 --gpus-per-node=p100:1

I am using single-node requests for now, but the task-oriented and multi-threaded requests are tempting. I may try task-oriented soon.

screen

{Doc}

NB: a window or region is the display area; a screen or bash is the running shell.

- start with ctrl+a to enter command mode

- vertical split region: |

- horizontal split region: S

- cancel split region: Q

- switch region: Tab

- activate or switch bash: ctrl+a

- change bash title: A

- switch to a certain bash: num

- command mode: :

  - focus right: focus on the right part

  - resize: change size; ctrl+- and ctrl++ also work for fast decrease and increase

- save layout: layout dump .my_filename

- reload layout: source .my_filename

- set as default: echo source .my_filename >> ~/.screenrc

An example workflow:

- ctrl+a | or ctrl+a S to split regions

- create a new screen with ctrl+a c, or activate one with a double ctrl+a

- change the title with ctrl+a A

job scheduling

Ref: {official doc}

check remaining quota (user limits)

Use sshare -A def-<account>_<cpu|gpu> -l -U <user> to check user limits. Replace the <> placeholders with your own account and user name; <cpu|gpu> means choose one of the two. This is tricky because there are actually two separate accounts for CPU and GPU jobs.

The EffectvUsage column shows the proportion used. A low EffectvUsage usually comes with a high LevelFS, which indicates high priority.

The partition-stats command should return the load of each node; however, it is not working on Cedar.

to minimize wait time

The official doc {Link} suggests that allocation requests of less than 3 hours tend to get near-instant responses.

In my experience on Graham, a request with --time=3:00:00 almost always starts right away.

The full set of run-time levels is:

- 3 hours or less

- 12 hours or less

- 24 hours (1 day) or less

- 72 hours (3 days) or less

- 7 days or less

- 28 days or less

The official instructions:

- Specify the job runtime only slightly (~10-20%) larger than the estimated value.

- Only ask for the memory your code will actually need (with a bit of a cushion).

- Minimize the number of node constraints.

- Do not package what is essentially a bunch of serial jobs into a parallel (MPI/threaded) job; it is much faster to schedule many independent serial jobs than a single parallel job using the same number of cpu cores.
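The first instruction (pad the estimate by 10-20%) is simple arithmetic, sketched below. The 150-minute estimate is a made-up example value; the result is formatted as HH:MM:SS for --time.

```shell
# Pad an estimated runtime by 20% and format it for --time.
estimate_min=150                                    # hypothetical estimate: 2.5 hours
padded_min=$(( (estimate_min * 120 + 99) / 100 ))   # +20%, rounded up to a whole minute
time_limit="$(printf '%02d:%02d:00' $((padded_min / 60)) $((padded_min % 60)))"

echo "#SBATCH --time=${time_limit}"
```

With this estimate the padded limit comes out at exactly 3 hours, so the job still fits in the shortest run-time level listed above.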

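Some handy command-line combos

    # batch rename with a for loop (strips the last 5 characters of each name)
    for i in *; do echo mv -i "$i" "${i::-5}"; done
    # sort by file size (ll is a common alias for ls -l) and print name and size
    ll -Shl FILE_NAME_PATTERN | awk 'NR>1 {print $NF, $5}'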
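A dry run followed by the real rename can be tried safely in a throwaway directory, as sketched here. The file names are made up. Instead of cutting a fixed number of characters, this variant uses the POSIX form ${i%.jpeg}, which only strips the suffix when it is really there.

```shell
# Work in a temporary directory so nothing real gets renamed.
demo_dir="$(mktemp -d)"
touch "$demo_dir/run1.jpeg" "$demo_dir/run2.jpeg"
cd "$demo_dir" || exit 1

# Dry run: echo prints the mv commands without executing them.
for i in *.jpeg; do echo mv -i -- "$i" "${i%.jpeg}"; done

# Real run: the same loop without echo.
for i in *.jpeg; do mv -i -- "$i" "${i%.jpeg}"; done

ls
```

Quoting "$i" keeps names with spaces intact, and -- stops a name starting with a dash from being read as an option.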

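match a pattern and print the next few lines

    # print each line matching 'sensors =' plus the 9 lines after it,
    # and every line containing 'File name'
    awk '/(sensors =)/{x=NR+9}(NR<=x){print}/File name/{print}' info_log.txt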
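Here is the same awk pattern on a tiny made-up log, with the window shortened to 2 lines so the effect is easy to see. The log content is invented for the demo.

```shell
# Sample log written to a temporary file.
log_file="$(mktemp)"
printf '%s\n' 'File name: a.dat' 'noise' 'sensors = 3' 's1' 's2' 'noise again' > "$log_file"

# On a match, remember the last line number to print (x = NR + 2);
# print while NR <= x, and also print every "File name" line.
picked="$(awk '/sensors =/{x=NR+2} (NR<=x){print} /File name/{print}' "$log_file")"
echo "$picked"
```

The two noise lines are dropped. Note that a "File name" line falling inside the window would be printed twice, because both rules fire on it.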

In the batch rename above, remove echo to actually perform the rename.

connect to allocated nodes

{Official Doc-Attach to a running job}

    srun --jobid JOB_ID --pty tmux

tmux is a screen-like tool for working with multiple terminal windows.

A tmux cheat sheet: {Ref}

check job status

Check the progress with:

    squeue -u $USER
    # or the short form
    sq -u $USER
    # cancel a job
    scancel JOB_ID

Jobs are usually in one of three states:

- CG: job completing

- PD: pending, followed by a reason (Resources, Priority, ReqNodeNotAvail) {Ref}

- R: running
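To see at a glance how many jobs sit in each state, the state codes can be counted with awk. This is only a sketch: count_states is a made-up helper name, and the sample input stands in for real squeue output so it runs without a scheduler.

```shell
# Count jobs per state from a listing with one state code per line.
count_states() {
  awk '{n[$1]++} END {for (s in n) print s, n[s]}' | sort
}

# Made-up sample input: two pending jobs and one running job.
summary="$(printf '%s\n' PD R PD | count_states)"
echo "$summary"

# On a cluster the input would come from squeue instead, e.g.:
#   squeue -u "$USER" -h -o '%t' | count_states
```

In the commented squeue call, -h drops the header line and -o '%t' prints only the compact state code.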

Ref: SHARCNET official course series {Dashboard_Link} {ML_Intro}, {Scheduler}

tensorflow deployment

The main challenge is version compatibility between numpy and tensorflow.

The following scheme works for now (2023/03/28) link

Official doc {Link}

tensorboard: interactive, visualized probing

Motivation: the model-profiling workload keeps growing, especially with transfer learning, so a quick way to visualize it is needed.

Official wiki: {Link}

I recommend connecting to a running node before using it; see the section on connecting to allocated nodes above.

Start tensorboard with the command below. The default port is 6006; use a different port to avoid interference. --load_fast is a TensorBoard option rather than something specific to Compute Canada; it is set to false here.

    tensorboard --logdir=<your_log_dir> --host 0.0.0.0 --load_fast false --port=6008

Then forward a local port to the remote port to visit it. The remote port must match the --port given to tensorboard (6008 above).

    ssh -N -f -L localhost:localport:computenode:6008 userid@cluster.computecanada.ca
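Keeping the ports in variables makes it harder to mix them up. The sketch below only builds and prints the tunnel command; every value is a placeholder to replace with your own node name, ports and login.

```shell
# Placeholders: substitute your own node, ports and login host.
remote_port=6008             # must match the --port given to tensorboard
local_port=6008              # any free port on your own machine
compute_node="computenode"   # hostname of the node running tensorboard
login_host="userid@cluster.computecanada.ca"

tunnel_cmd="ssh -N -f -L localhost:${local_port}:${compute_node}:${remote_port} ${login_host}"
echo "$tunnel_cmd"
echo "then open http://localhost:${local_port}"
```

Run the printed command on your local machine, not on the cluster.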

iPython

    # auto-completion: press Tab
    # see the doc of a function
    help(YOUR_FUNCTION)
    # load a venv in jupyter: install ipykernel first
    pip install ipykernel   # or: conda install ipykernel
    # --user installs only for the current user
    # ENV_NAME should be consistent with the venv created by virtualenv
    # a typical display name can be 'Python(ENV_NAME)'
    python -m ipykernel install --user --name ENV_NAME --display-name DISPLAY_NAME

zip

{Ref}

    # zip one or more folders into a .zip file
    zip -r archivename.zip directory_name
    # zip several files into one zip file
    zip archivename.zip file1 file2 file...
    # set the compression level from 0 to 9; -0 is no compression, -9 is maximal compression
    zip -0 -r archivename.zip directory_name
    # encrypted zip
    zip -e -r archivename.zip directory_name

ML 101

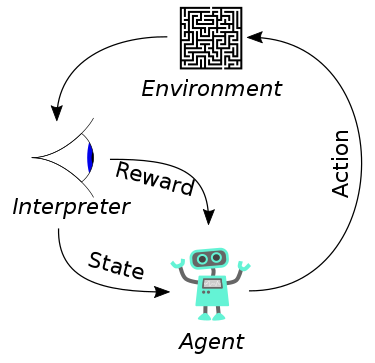

Reinforcement Learning

From wiki

It doesn't need paired labels. The practitioner only needs to rate the results generated by the model.

scheduler run MNIST with GPU

Notes from reading man sbatch

- --constraint can specify the required CPU/GPU features.

- If you write bash scripts in Notepad++ on Windows, replace every '\r\n' with '\n' before running them on Linux.

- The sbatch submit account only accepts def-bingqli so far.

- If certain packages are required, install them in your own environment ahead of time, for example torchvision, torchtext, torchaudio: pip install --no-index torchvision

- See this page for JupyterHub on clusters: https://docs.computecanada.ca/wiki/JupyterHub

- Check the available wheels here: https://docs.computecanada.ca/wiki/Available_Python_wheels

- Check jobs in the queue: squeue -u $USER, or sq -u $USER
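The line-ending fix for scripts written on Windows can also be done on the cluster itself. A minimal sketch with tr follows; the script content is made up for the demo, and dos2unix does the same job where it is installed.

```shell
# Build a small script with Windows (CRLF) line endings for the demo.
script="$(mktemp)"
printf '#!/bin/sh\r\necho hello\r\n' > "$script"

# Delete every carriage return, then replace the original file.
tr -d '\r' < "$script" > "$script.unix" && mv "$script.unix" "$script"

# The cleaned script now runs normally.
output="$(sh "$script")"
echo "$output"
```

Without the conversion, the stray carriage returns end up glued to commands and arguments, which tends to produce confusing "not found" errors.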

parallel computing

- Only parallelize tasks that take longer than 1e-4 s

- Check the flow with a timer instead of guessing

Windows Users

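See {Zhihu: step-by-step beginner tutorial}

It covers:

- loading Python

- building a virtual environment

- batch-installing the required packages

There is also a second part, {Job Submission}:

It focuses on automatic submission of multiple jobs.

I have now successfully connected to Graham.

According to the official site, there seems to be a recommended data structure.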

Storage&File Management

The official tutorial explains most file operations:

{FAQ}

Visual exploration of Data by SHARCNET: {Youtube}

Highlight:

- df.groupby(...).plot(kind='hist')

- seaborn PCA plotting, etc.

- interactive matplotlib (hide data)

- create your own python web app with bokeh

Come back when you need to visualize your data.

free WebDAV from NextCloud

NextCloud is a cloud storage service that is also hosted by Compute Canada. Each user/group has a 100GB quota.

I personally use it for Zotero. To set up WebDAV, log into NextCloud, click the settings at the bottom left, copy the WebDAV URL, and paste it into the URL field of the other software. The username and password are the same as your NextCloud ones.